Summary:

In this article we will learn about the Windows Hypervisor Platform (Hyper-V) and Virtual Machine Platform components.

Applies to Windows Operating System

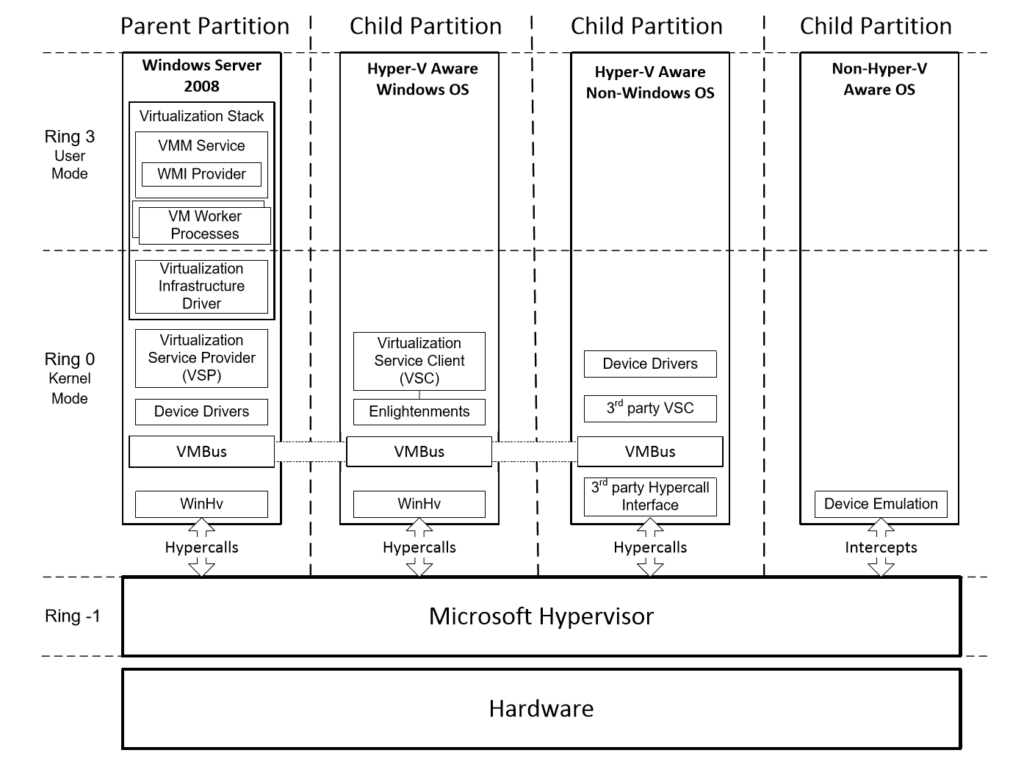

Windows Hypervisor Platform – Hyper-V Architecture

Ring -1 and Ring 0

Intel VT-x and AMD-V processors extend the four-ring x86 privilege architecture with an additional privilege level for the hypervisor, commonly called Ring -1. The hypervisor runs there and executes critical operations that require direct interaction with the hardware.

This allows a guest operating system kernel to run at Ring 0 and invoke the hypervisor in Ring -1 for critical operations with less overhead (the hypervisor running in Ring -1 can control components running in Ring 0). These processors also implement Extended Page Tables (EPT) and tagged Translation Lookaside Buffers (TLBs) to support virtual machine isolation.

Hypervisor initialization settings are stored in the BCD (Boot Configuration Data) store.

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control

Hyper-V boot driver: Hvboot.sys

Parent and Child Partition

- Hyper-V provides isolation in terms of partitions (logical units of isolation managed by the hypervisor). The hypervisor must have one parent (root) partition and can have multiple child partitions.

- Child partition is created from within the Parent Partition

- Virtualization stack runs in the parent partition and has direct access to the hardware devices

- Parent partition creates child partition using Hypercall application programming interface (API)

- Child partitions do not have direct access to the physical processor, nor do they handle processor interrupts. They have a virtual view of the processor and run in a virtual memory address region that is private to each guest partition.

- The hypervisor handles interrupts to the processor and redirects them to the respective partition.

- An IOMMU (Input-Output Memory Management Unit) is used to remap (translate) physical memory addresses to the addresses that are used by the child partitions.

- Child partitions do not have direct access to other hardware resources; they are presented with a virtual view of these resources as virtual devices (VDevs).

VMBus

- Requests to the VDevs are redirected either through the VMBus (a logical inter-partition communication channel) or through the hypervisor to the devices in the parent partition.

- Integration Services use the VMBus.

- Virtualization Service Providers (VSPs) in the parent partition communicate over the VMBus to handle device access requests from child partitions. Virtualization Service Clients (VSCs) running inside each child partition redirect device access requests to the VSPs via the VMBus.

- Enlightened I/O is a specialized, virtualization-aware implementation of high-level communication protocols such as SCSI that uses the VMBus directly, bypassing any intermediate device emulation layer. It requires an enlightened guest, that is, one that is hypervisor-aware and VMBus-aware.

Hypercalls:

The hypercall interface is an assembly-language interface that partitioned operating systems use to access the hypervisor.
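To make "accessing the hypervisor" a little more concrete, the sketch below packs and unpacks the 64-bit hypercall input value that a guest places in a register before issuing a hypercall. The field positions (call code in bits 15:0, fast flag in bit 16, rep count in bits 43:32, rep start index in bits 59:48) follow our reading of the Hyper-V Top-Level Functional Specification and should be checked against the version you target. The helper names and the call code `0x1234` are hypothetical placeholders, and nothing here actually issues a hypercall.

```python
# Field layout of the hypercall input value (assumed from the Hyper-V TLFS).
CALL_CODE_MASK = 0xFFFF   # bits 15:0  - which hypercall to invoke
FAST_BIT = 16             # bit 16     - parameters passed in registers, not memory
REP_COUNT_SHIFT = 32      # bits 43:32 - number of elements for a "rep" hypercall
REP_START_SHIFT = 48      # bits 59:48 - index of the first element to process
REP_FIELD_MASK = 0xFFF    # both rep fields are 12 bits wide


def encode_hypercall_input(call_code: int, fast: bool = False,
                           rep_count: int = 0, rep_start: int = 0) -> int:
    """Pack the fields into one 64-bit hypercall input value."""
    if not 0 <= call_code <= CALL_CODE_MASK:
        raise ValueError("call code must fit in 16 bits")
    for field in (rep_count, rep_start):
        if not 0 <= field <= REP_FIELD_MASK:
            raise ValueError("rep fields must fit in 12 bits")
    value = call_code
    value |= int(fast) << FAST_BIT
    value |= rep_count << REP_COUNT_SHIFT
    value |= rep_start << REP_START_SHIFT
    return value


def decode_hypercall_input(value: int) -> dict:
    """Unpack a hypercall input value back into its fields."""
    return {
        "call_code": value & CALL_CODE_MASK,
        "fast": bool((value >> FAST_BIT) & 1),
        "rep_count": (value >> REP_COUNT_SHIFT) & REP_FIELD_MASK,
        "rep_start": (value >> REP_START_SHIFT) & REP_FIELD_MASK,
    }
```

Keeping encode and decode side by side makes the round trip easy to check when comparing against a debugger trace.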

Windows Hypervisor Interface Library (WinHv.sys)

The Windows Hypervisor Interface Library (WinHv.sys) is a kernel-mode DLL that is loaded in the Windows Server instance running in the parent partition, as well as in Windows-based virtual machines.

WinHv is essentially a bridge between a partitioned operating system’s drivers and the hypervisor, allowing those drivers to call the hypervisor using hypercalls. All communication between enlightened Windows-based virtual machines and the hypervisor is handled by WinHv.sys, except for motherboard-level communication such as the CPUID instruction used to determine processor compatibility.

WinHv.sys cannot be used by Linux virtual machines.
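Because CPUID is how guest software first detects the hypervisor, the following sketch interprets CPUID register values. It assumes the documented x86 convention that CPUID leaf 1 sets ECX bit 31 when a hypervisor is present, and that leaf 0x40000000 returns a 12-byte vendor signature in EBX, ECX and EDX ("Microsoft Hv" for Hyper-V). Python cannot execute CPUID itself, so the register values are inputs you would obtain from another tool; the helper names are hypothetical.

```python
import struct

HYPERVISOR_PRESENT_BIT = 31  # CPUID leaf 1, ECX bit 31


def hypervisor_present(leaf1_ecx: int) -> bool:
    """True if the 'hypervisor present' bit is set in CPUID.1:ECX."""
    return bool((leaf1_ecx >> HYPERVISOR_PRESENT_BIT) & 1)


def vendor_signature(ebx: int, ecx: int, edx: int) -> str:
    """Decode the signature from CPUID leaf 0x40000000.

    Each register holds four ASCII characters in little-endian byte order,
    and the signature is read in the order EBX, ECX, EDX.
    """
    return struct.pack("<III", ebx, ecx, edx).decode("ascii")
```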

Parent (Primary) Partition:

The parent (root) partition is the first partition that is created when the hypervisor starts.

It manages the following tasks:

- Creating and managing other partitions

- Managing and assigning hardware devices

- Handling power management, Plug and Play devices, and hardware failures

The parent partition does not manage CPU scheduling or physical memory allocation; these are managed by the hypervisor.

The virtualization stack consists of the following components:

- Virtual Machine Management Service

- Virtual Machine Worker Process

- Virtual Devices (VDevs)

- Virtualization Service Providers

- Virtualization Infrastructure Drivers

- Windows Hypervisor Interface Library

Virtual Machine Management Service:

VMMS is responsible for managing the state of all virtual machines in child partitions. It performs management tasks for stopped and offline virtual machines.

Once a virtual machine is online, its VM Worker Process performs the management tasks for that VM.

VMMS comprises the following components:

- WMI Provider

- Virtual Machine Manager

- Worker Process Manager

- Snapshot Manager

- Single port listener for RDP

- Active Directory Service Marker

- VSS Writer

- Cluster Resource Control

- VMMS State management

Virtual Machine WMI Provider

It is used to modify the configuration or the state of the virtual devices in the child partition.

VMMS acts as the primary WMI provider and processes virtual machine-specific calls for both offline VMs (handled by VMMS) and online VMs (handled by the VM Worker Process).

The VMMS WMI provider supports the following tasks:

- Migrating the computer system to another server

- Taking a snapshot of the computer system

- Properties that reflect the state of the computer system (on, off, or saved)

- A read-only timestamp reflecting when the VM was saved

- Read-only properties that reflect the current state of online VMs

Other WMI Providers:

Network-specific and storage-specific WMI providers.

Snapshot Manager:

For online VMs, snapshots are handled by the VM Worker Process. For offline VMs, snapshots are handled by the Snapshot Manager within VMMS.

The following steps are performed when a snapshot is created:

1. Creates a new differencing hard disk image of the current .vhd file as an .avhd file in the specified location.

2. Creates a new snapshot configuration (<GUID>.xml).

3. Copies the virtual machine configuration to the snapshot configuration file.

4. Copies the saved state file (<GUID>.vsv) and attaches it to the snapshot configuration.

5. Creates a <GUID>.bin file, and copies the contents of memory to the file.
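The five snapshot steps above can be summarized as the set of files they produce. This is a minimal sketch: the extensions (.avhd, .xml, .vsv, .bin) come from the steps, but the exact file names and the single-folder layout (for example, `<vhd name>_<GUID>.avhd`) are assumptions, and `snapshot_artifacts` is a hypothetical helper that only computes paths without touching the disk.

```python
import uuid
from pathlib import PureWindowsPath


def snapshot_artifacts(vhd_path: str, snapshot_dir: str,
                       snapshot_id: uuid.UUID) -> dict:
    """Map each snapshot step to the file it would produce (naming is assumed)."""
    vhd = PureWindowsPath(vhd_path)
    folder = PureWindowsPath(snapshot_dir)
    guid = str(snapshot_id).upper()
    return {
        # Step 1: differencing disk whose parent is the current .vhd
        "differencing_disk": folder / f"{vhd.stem}_{guid}.avhd",
        "parent_disk": vhd,
        # Steps 2-3: snapshot configuration holding a copy of the VM configuration
        "configuration": folder / f"{guid}.xml",
        # Step 4: saved state file attached to the snapshot configuration
        "saved_state": folder / f"{guid}.vsv",
        # Step 5: contents of guest memory
        "memory": folder / f"{guid}.bin",
    }
```

For example, `snapshot_artifacts(r"D:\VMs\web01.vhd", r"D:\VMs\Snapshots", uuid.uuid4())` lists where each step would write, which is handy when checking which snapshot files belong together.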

Single Port Listener For RDP

The Virtual Machine Connection client (vmconnect.exe) is the application that is launched when a virtual machine connection is made from the Hyper-V Management Console. It provides a window for interacting with a single virtual machine.

The Single Port Listener for RDP in the Virtual Machine Management Service listens for incoming connection requests on TCP port 2179 (0x883 in hexadecimal) in the parent partition.

The Virtual Machine Connection client application uses the Terminal Services ActiveX control, mstscax.dll (similar to mstsc.exe), to make the connection to TCP port 2179 when it is launched

When the Virtual Machine Connection client application connects to port 2179, the Virtual Machine Management Service routes the communication to the worker process for the specified virtual machine. The worker process utilizes rdp4vs.dll to communicate with the Terminal Services ActiveX control in the Virtual Machine Connection client application.
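When vmconnect.exe cannot reach a virtual machine, a first check is whether TCP port 2179 on the parent partition accepts connections at all. The sketch below does only that: it opens and closes a plain TCP connection and does not speak the RDP protocol that the Terminal Services ActiveX control uses. The function name and the 3-second timeout are our own choices.

```python
import socket

VMMS_RDP_PORT = 2179  # Single Port Listener for RDP (0x883)


def listener_reachable(host: str, port: int = VMMS_RDP_PORT,
                       timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, or unresolvable: the listener is not reachable.
        return False
```

A `False` result points at the network path, a firewall, or the VMMS service itself rather than at the worker process, because VMMS only hands the session to the worker process after the TCP connection is accepted.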

Cluster Resource Control

The Cluster Resource Control is the Virtual Machine Management Service interface to VMClusres.dll, the user mode component that Windows Server 2008 Failover Clustering uses to create and manage highly available virtual machines.

VMMS State Management

The Virtual Machine Management Service manages the offline states of all virtual machines and controls which operations are permitted in a particular state.

The following states are managed by the VMMS:

- Not Active

- Starting

- Active

- Taking Snapshot

- Applying Snapshot

- Deleting Snapshot

- Merging Disk

Online virtual machine operations, such as Pause, Suspend, and Stop, are managed by the Virtual Machine Worker Process of each running virtual machine.
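The division of labor between VMMS and the worker process can be summarized in a few lines. This is a minimal sketch under stated assumptions: `handling_component` is a hypothetical helper, and rejecting online-only operations for a VM that is not running is our assumption about how such a request would be treated.

```python
WORKER = "Virtual Machine Worker Process (vmwp.exe)"
VMMS = "Virtual Machine Management Service (VMMS)"

# Operations the text assigns to the worker process of a running VM.
ONLINE_ONLY = {"Pause", "Suspend", "Stop"}


def handling_component(operation: str, vm_online: bool) -> str:
    """Return the component that manages `operation` for a VM."""
    if vm_online:
        # Once a VM is online, its own worker process takes over management.
        return WORKER
    if operation in ONLINE_ONLY:
        # Assumption: these requests make no sense for a VM that is not running.
        raise ValueError(f"{operation} applies only to a running VM")
    # Stopped and offline VMs, including their snapshots, are handled by VMMS.
    return VMMS
```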

The Active Directory Service Marker (ADSM)

The ADSM is a subcomponent of the Virtual Machine Management Service that provides registration and management of Service Connection Points (SCPs) in Active Directory. An SCP is an object published in Active Directory that allows clients to query for data that the service has published in the SCP. SCPs are stored in the global catalog, which makes them searchable across an Active Directory forest.

Each time the Virtual Machine Management Service starts, the ADSM attempts to re-register the service connection point. If a valid Active Directory domain controller cannot be found within the timeout window, Event ID 14050 is logged.

Hyper-V does not require the SCP to be successfully registered to load or to provide virtualization services.

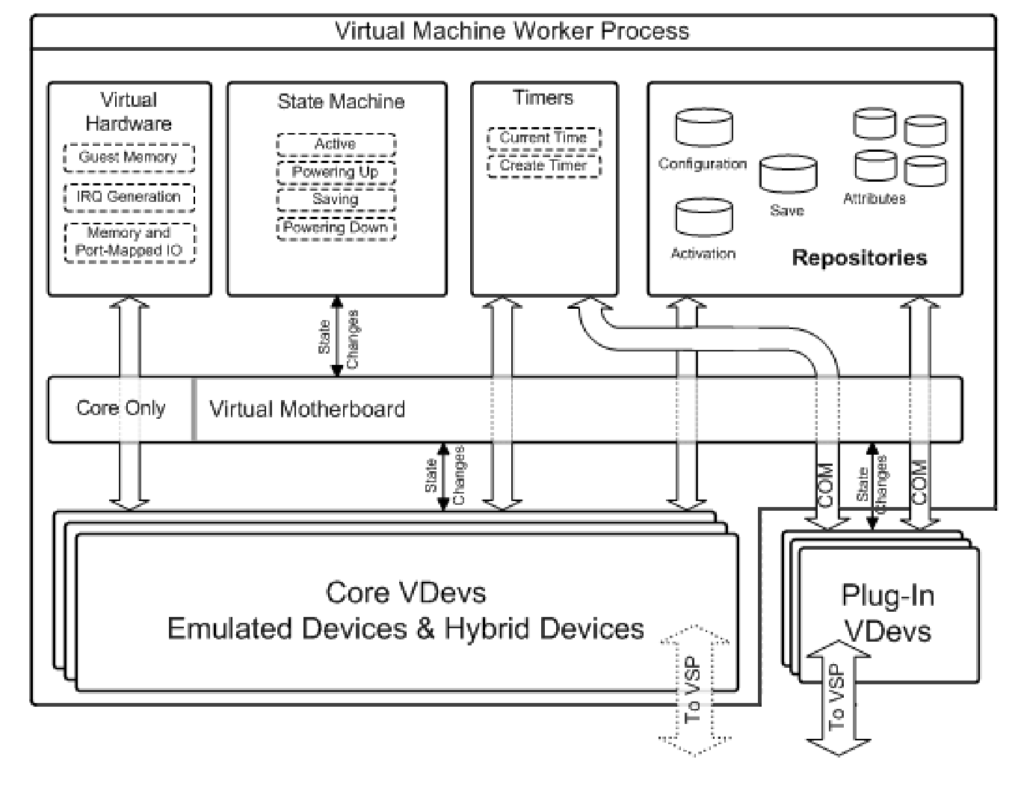

Virtual Machine Worker Process

The Virtual Machine Worker Process, vmwp.exe, is a user mode component that provides virtual machine management services from the Windows Server instance in the parent partition to the guest operating systems in the child partitions.

The VM Worker Process runs under the Network Service account.

The worker process provides the following functionality:

- Contains the Virtual Motherboard (VMB) with emulated devices.

- The initial and subsequent configurations of virtual machines.

- The creation and running of a virtual machine.

- Responds to dynamic resource change requests.

- Pausing & resuming virtual machines

- Saving & restoring virtual machines

- Snapshotting of virtual machines

- Provides a user level runtime facility for Virtual Devices (VDevs).

Virtual Hardware

The virtual motherboard exposes the following virtual hardware to virtual machines as separate devices:

- Guest memory

- IRQ generation

- Memory Mapped and Port Mapped IO

This virtual hardware is exposed only to Core VDevs.

State Machine

The state machine drives the creation of a virtual machine and it is responsible for the runtime state of a virtual machine. It controls all the state transitions of a virtual machine including starting, resetting, migrating and stopping a virtual machine as well as saving state, pausing, and snapshotting.

Timers

Used for creating and maintaining a virtual machine’s current time and other timing functions.

Repositories

Logical storage locations for configuration and runtime information that is associated with a virtual machine

For online virtual machines, this information is maintained by the worker process in conjunction with the Virtual Machine Configuration Module, vsconfig.dll, which is also loaded in-process with the worker process.

The saved-state repository stores its data in the virtual machine’s saved state files, which are the <GUID>.vsv and <GUID>.bin files

Virtual Devices (VDevs)

- A Virtual Device (VDev) is a software module that provides device configuration and control for a child partition and resides in the virtualization stack.

- The virtual motherboard (VMB) is the component responsible for the management of virtual devices, including configuration support, timing services, and device state changes.

- The virtual motherboard includes a basic set of virtual devices including the PCI bus and the chipset level devices

- When a virtual machine is created, configuration services within the VM Worker Process in the parent partition provide information that instructs the virtual motherboard to identify, initialize, and configure all of the virtual devices.

- Virtual devices are one of two types, either Core VDevs or Plug-in VDevs

Core VDev:

- Core VDevs are software modules that model hardware devices and are available to each virtual machine.

- Most of the Core VDevs are also categorized as emulated devices since they emulate a specific hardware device, such as an S3 video card.

- Core VDevs are typically used where compatibility is important, because existing software such as BIOSes and drivers continues to work without modification.

- Core VDevs are implemented within the Virtual Machine Worker Process to simplify development by allowing the use of existing Virtual Server emulated device code and avoid creating COM interfaces for an existing device infrastructure.

- The emulated device Core VDevs include BIOS, DMA, APIC, ISA Bus, PCI Bus, PIC Device, PIT Device, Power Mgmt device, RTC device, Serial Controller, Speaker device, 8042 PS/2 keyboard/mouse controller, Emulated Ethernet, Floppy controller, IDE Controller, VGA/VESA video

- The only two synthetic device Core VDevs implemented at this time are a Synthetic Video Controller and a Synthetic Human Interface Device (HID) controller. The synthetic video controller and synthetic HID controller are implemented as Core VDevs because they utilize the virtual motherboard.

These synthetic devices are only available to guest operating systems that support Integration Services

Plug-in VDev

- Plug-in VDevs are software modules that do not model existing hardware devices and are designed to control synthetic devices.

- Synthetic device support is made available to child partitions using Virtualization Service Clients (VSCs) communicating over the VMBus to Virtualization Service Providers (VSPs) running in the parent partition that controls the hardware

- The main advantage of Plug-in VDevs is that they allow direct communication between the parent and child partition through the VMBus.

- Unlike Core VDevs, Plug-in VDevs do not have access to all of the chipset-level devices and services of the virtual motherboard.

- Plug-in VDevs are implemented as in-process Component Object Model (COM) components to the VM worker process and are only accessible to enlightened operating systems.

- A subset of the Integration Services are Integrated Components. Integrated Components are implemented as system services hosted in the Virtual Machine Integration Component Service (vmicsvc.exe) in child partitions

Plug-in VDev Synthetic Device

1. Synthetic Network Interface: Synthnic.dll

2. Synthetic Storage: Synthstor.dll

Because the Plug-in VDevs are implemented as COM objects, they are dependent upon Distributed COM (DCOM) functionality.

The Integrated Component Plug-in VDevs that are hosted in the virtual machine worker processes on the parent partition are:

- Heartbeat: Vmicheartbeat.dll

- Key Value Pair: Vmickvpexchange.dll

- Shutdown VMs: Vmicshutdown.dll

- Time Sync: Vmictimesync.dll

- Volume Shadow Copy Service: Vmicvss.dll

Virtualization Service Providers (VSPs)

Virtualization Service Providers (VSPs) provide I/O-related resources to a Virtualization Service Client (VSC) running in a child partition.

- A VSP is typically implemented as a kernel-mode component, but may be implemented in user-mode services as well.

- VSPs are hosted on the parent partition and provide a method of publishing device services to other partitions.

- A VSP is the server endpoint of the client/server communication of a device’s functionality. The client endpoint of the client/server communication is handled by the Virtualization Service Client (VSC)

All communication between VSPs and VSCs takes place over the VMBus. Hyper-V includes the following 4 Virtualization Service Providers:

1. Network VSP

2. Storage VSP

3. Video VSP

4. Human Interface Device (HID) VSP

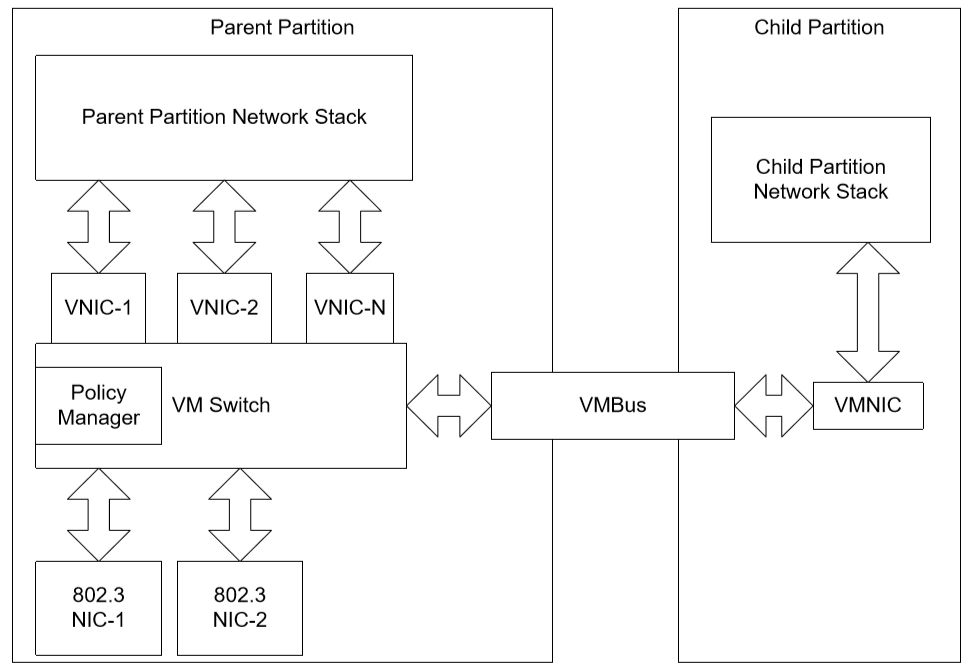

Network VSP

- The Network VSP provides the ability to share a physical network device between the parent partition, which owns the device, and child partitions.

- It also allows the addition of multiple virtual Ethernet adapters (VNICs) to the parent partition.

- The Network VSP is implemented in VMSwitch.sys, a MUX intermediate (IM) driver, in the parent partition.

(From the description of the NDIS MUX sample: “The MUX Intermediate Miniport (IM) driver is an NDIS 6.0 driver that demonstrates the operation of an ‘N:1’ MUX driver. The sample demonstrates creating multiple virtual network devices on top of a single lower adapter. Protocols bind to these virtual adapters as if they are real adapters.”)

- It provides the Microsoft Virtual Network Switch Protocol that can be bound to the physical network adapter.

The corresponding Network Virtualization Service Client (VSC) that is installed in child partitions is the VMNIC, implemented in netvsc60.sys (for Windows Server 2008 and Windows Vista SP1) and netvsc50.sys (for Windows Server 2003 SP2 and Windows XP SP3). VMNIC is an NDIS miniport driver used for creating a virtual Ethernet adapter in the child partition.

Synthetic Network Interface Communication

Network VSP WMI Provider

A Network VSP WMI provider is included with Hyper-V to expose the following virtual network device properties:

1. Description of the network topology

2. Read-only representation of the virtual network switch and its current settings

3. Changeable settings such as connections, VLAN IDs, and bandwidth settings

The Network VSP WMI provider is implemented as the Microsoft Hyper-V Networking Management system service (nvspwmi), hosting nvspwmi.dll.

Storage VSP

The Storage VSP is a client of the native storage stack in the parent partition. It provides the ability to share physical storage devices between the parent partition, which owns the devices, and child partitions.

The Storage VSP is implemented in Storvsp.sys in the parent partition. The Storage VSP leverages the SRB server, a parser, and the control interface to support virtual machine storage functionality.

1. SRB Server

The SRB server adds packets to and removes packets from the VMBus. These packets usually contain the SCSI request blocks (SRBs) that the client needs.

The SRB server will also handle basic controls that do not require a parser.

2. Parser:

A VSP does not understand image formats on its own, so a set of extensible parser drivers manages the mapping between the virtual disk and the physical hardware.

The storage VSP queries each virtual machine at boot to determine the type of storage it has configured and the VSP loads the appropriate parser to translate the format.

There are three kinds of parsers:

1. VHD parser (vhdparser.sys) handles the VHD format.

2. ISO Image parser (isoparser.sys) handles interpreting ISOs as CDrom media.

3. Pass-through device parser (passthruparser.sys) handles devices that are shared as virtual storage (i.e. direct-attached storage).
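The parser selection described above can be pictured as a lookup from the configured storage type to a parser driver. This is only an illustration: the three driver names come from the text, but selecting by file extension, treating .avhd files as VHD format, and the `select_parser` helper itself are assumptions, not the Storage VSP's documented logic.

```python
from pathlib import PureWindowsPath

# Parser drivers named in the text; keying them by extension is an assumption.
PARSER_BY_EXTENSION = {
    ".vhd": "vhdparser.sys",
    ".avhd": "vhdparser.sys",  # assumption: differencing disks use the VHD format
    ".iso": "isoparser.sys",
}
PASSTHROUGH_PARSER = "passthruparser.sys"


def select_parser(backing: str, is_physical_disk: bool = False) -> str:
    """Pick the parser driver for a VM's configured storage (hypothetical helper)."""
    if is_physical_disk:
        # Pass-through disks are shared directly rather than through an image file.
        return PASSTHROUGH_PARSER
    extension = PureWindowsPath(backing).suffix.lower()
    try:
        return PARSER_BY_EXTENSION[extension]
    except KeyError:
        raise ValueError(f"no parser registered for {backing!r}") from None
```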

3. Control Interface:

The control interface provides a means to obtain configuration input/output controls (IOCTLs) sent from user-mode clients when the Storage VSP starts.

The corresponding Storage Virtualization Service Client (VSC) that is installed in child partitions is implemented in storvsc.sys.

The Storage VSC is only installed in a child partition when a SCSI controller is added to the child partition. Otherwise, the legacy IDE controller is used for child partition storage.

Important:

The only time that Hyper-V mounts a child partition VHD in the parent partition is when compacting a virtual hard disk using the Compact feature available in the Edit Virtual Hard Disk Wizard. The Convert and Expand virtual hard disk features do not mount the child partition VHD in the parent partition.

The start type of the storvsc service is changed from 4 (Disabled) to 3 (Manual).

Storage VSP WMI Provider

A Storage VSP WMI provider is included with Hyper-V to expose the following virtual storage device properties and methods:

1. Read-only properties that describe the virtual hard disks, floppy disks, and CD/DVD disks, such as name, location, size, and type

2. Read/write property for specifying the parent of a differencing hard disk

3. Methods for operating on the virtual hard disk, such as converting, compacting, and merging

The Storage VSP WMI provider is implemented as the Microsoft Hyper-V Image Management system service (vhdsvc), hosting vhdsvc.dll.

It executes in a shared service host process (svchost.exe), using the virtsvcs identifier

Video VSP

- The Video VSP, implemented in the Virtual Machine Worker Process, is provided to obtain higher-performance 2D graphics output for a child partition

- The Video VSP communicates with the VMBus Video Device (VMBusVideoM.sys) VSC on a virtual machine with Integration Services installed.

- Windows Server 2008 clients: Windows Display Driver Model (WDDM) SynthVid driver

- Windows 2000 and Windows Server 2003 clients: Windows XP Display Driver Model (XDDM) SynthVid driver

Human Interface Device (HID) VSP

The Human Interface Device (HID) VSP, implemented in the Virtual Machine Worker Process, provides mouse and keyboard device performance enhancements for a child partition.

The HID VSP communicates with the VMBus HID Miniport driver (VMBusHID.sys) VSC on a virtual machine with Integration Services installed.

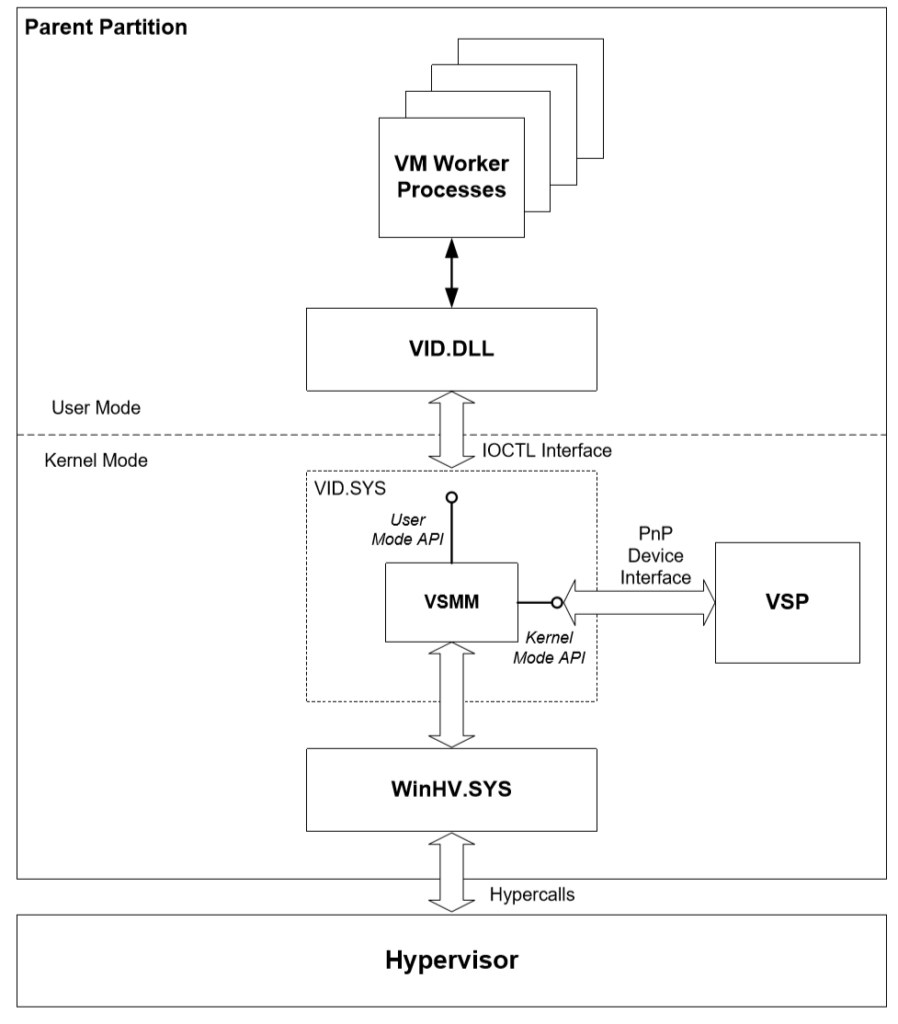

Virtualization Infrastructure Driver (VID)

The VID provides partition management, virtual processor management, and memory management services for partitions.

It also provides a way for user-mode virtualization stack components, such as the Virtual Machine Worker Process, to communicate with the hypervisor.

The Virtualization Infrastructure Driver is implemented in Vid.sys

A user mode library, Vid.dll, is used to facilitate communication with user mode processes such as the Virtual Machine worker process.

Partition Management

When creating and managing virtual machine partitions, the Virtualization Infrastructure Driver acts as a pass through layer from the hypervisor to the user mode virtualization stack.

The VID performs the following partition management tasks:

- Create and Delete child partitions

- Start and stop child partitions

- Save and restore the state of a running child partition

- Modify the CPU resource allocation of a child partition

- Ability to communicate with hypervisor via the Synthetic Interrupt Controller (SynIC)

Virtual Processor Management

The Virtualization Infrastructure Driver also acts as a pass through layer for virtual processors from the hypervisor to the user mode virtualization stack.

The VID performs the following virtual processor management tasks:

- Set and change the number of virtual processors in a partition while the partition is in an inactive state

- Start and stop individual virtual processors

- Read and write the state of a virtual processor while the virtual processor is in a suspended state.

Virtual Stack Memory Manager (VSMM)

To manage a child partition’s memory, the VSMM uses the hypervisor’s services, communicating with the hypervisor through hypercall APIs via WinHv.sys.

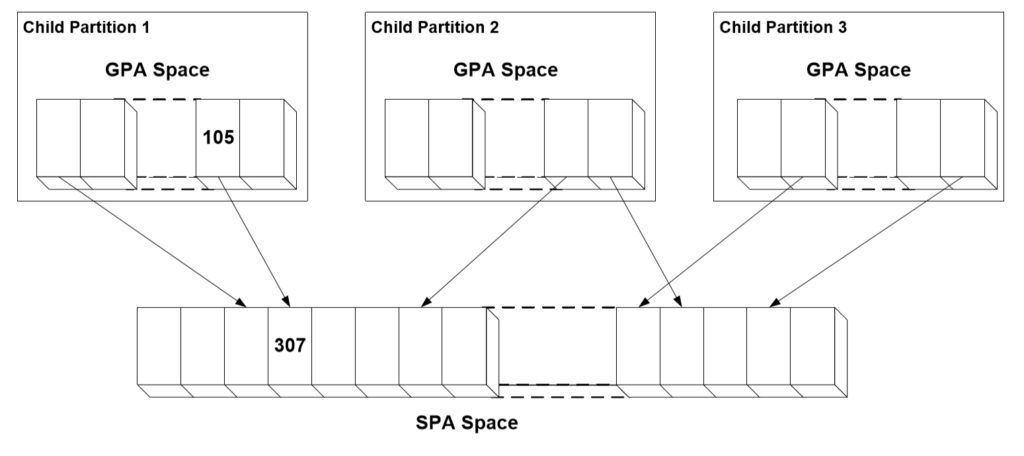

The physical memory that is seen by the hypervisor is called System Physical Address (SPA) space.

The pages allocated for the operating system in a child partition are not necessarily contiguous so a remapping takes place to allow the guest to see a contiguous Guest Physical Address (GPA) space.

System Physical Address space refers to the physical memory’s physical addresses. Guest Physical Address space is the set of pages that are accessed when a guest references a physical address

There is one SPA space per machine and one GPA space per child partition

When the operating system is running within a child partition using Hyper-V, the guest page tables reference GPA, although, as far as the child partition operating system knows, this is physical memory. Even though the guest references a GPA page when referring to physical memory, these references have to be converted so the actual memory access is performed on an SPA page.

The following figure shows three child partitions, each having its own GPA space. The pages in each GPA space point to SPA pages. For example, when Partition A executes an instruction that accesses memory in GPA page number 105, the underlying page that will be accessed is SPA page number 307. The hypervisor uses and keeps track of the GPA-to-SPA mappings, but the Virtual Stack Memory Manager creates them.

Guest Physical Address to System Physical Address mapping

Note that most child partition instructions do not directly reference GPA addresses. Instead, they reference Guest Virtual Addresses (GVA). Guest Virtual Addresses are the virtual address space that the child partition operating systems and applications “see” and refer to.

For operating systems running on physical hardware (i.e. not virtualized), virtual to physical address translation is done by the processor using page tables provided by the operating system. The hypervisor must ensure that GVA to SPA translation is performed (rather than GVA to GPA), so that an actual SPA page is referenced. The algorithm used by the hypervisor for performing these translations is known as ATC (Address Translation Control).

The hypervisor maintains a shadow page table for each partition to translate Guest Virtual Addresses to System Physical Addresses.

The only responsibility of the VSMM in this process is to provide the hypervisor with the GPA-to-SPA mappings for a child partition.
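The two-stage translation described above can be sketched with dictionaries standing in for page tables. The GPA page 105 to SPA page 307 mapping is Partition A's example from the text; the 4 KiB page size, the single-level tables, and the guest virtual page number 2 are simplifying assumptions (real page tables are multi-level and the hypervisor's ATC algorithm is not reproduced here).

```python
PAGE_SIZE = 4096  # assumption: 4 KiB pages


def translate(gva: int, guest_page_table: dict, gpa_to_spa: dict) -> int:
    """Two-stage lookup: GVA -> GPA (guest's table) -> SPA (VSMM-provided map)."""
    page, offset = divmod(gva, PAGE_SIZE)
    gpa_page = guest_page_table[page]  # what the guest believes is physical memory
    spa_page = gpa_to_spa[gpa_page]    # where the page really lives
    return spa_page * PAGE_SIZE + offset


def build_shadow_table(guest_page_table: dict, gpa_to_spa: dict) -> dict:
    """Collapse both stages into one GVA-page -> SPA-page map, as a shadow table does."""
    return {gva_page: gpa_to_spa[gpa_page]
            for gva_page, gpa_page in guest_page_table.items()}


# Partition A from the figure: GPA page 105 is backed by SPA page 307.
partition_a_gpt = {2: 105}            # hypothetical guest mapping: virtual page 2 -> GPA 105
partition_a_gpa_to_spa = {105: 307}   # supplied by the VSMM
shadow = build_shadow_table(partition_a_gpt, partition_a_gpa_to_spa)
```

The shadow table is what lets a single lookup replace two: the guest only ever edits its own GVA-to-GPA table, and the hypervisor folds in the VSMM's GPA-to-SPA map.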

Physical Memory Reservation for the Parent Partition

A portion of physical memory may be reserved for use by the parent partition, preventing the specified amount from being available to child partitions. This is to help ensure that the parent partition’s stability is not compromised by child partition memory allocations.

This behavior is controlled by the RootMemoryReserve REG_DWORD value in the following registry key:

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows NT\CurrentVersion\Virtualization

Default value: 0x20 (32 decimal) megabytes

Maximum value: 0x400 (1024 decimal) megabytes, i.e. 1 GB

When the VMMS receives the SERVICE_CONTROL_PRESHUTDOWN event notification to inform it that a physical machine shutdown is in progress, it starts a thread to perform the specified shutdown action on all of the active virtual machines.

The parent partition memory reserve feature may be disabled by setting the RootMemoryReserve value to 0
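The sketch below reads RootMemoryReserve and interprets it using the default, maximum, and disable values given above. Two assumptions are labeled in the code: that a missing value means the 32 MB default applies, and that out-of-range values should be rejected. `winreg` exists only in Python on Windows, so the read function imports it lazily and only the pure interpretation function is portable.

```python
REG_PATH = r"SOFTWARE\Microsoft\Windows NT\CurrentVersion\Virtualization"
DEFAULT_RESERVE_MB = 0x20  # 32 MB
MAX_RESERVE_MB = 0x400     # 1024 MB (1 GB)


def effective_reserve_mb(raw_value):
    """Interpret a RootMemoryReserve REG_DWORD (in MB); None means 'not set'."""
    if raw_value is None:
        return DEFAULT_RESERVE_MB  # assumption: absent value => default applies
    if not 0 <= raw_value <= MAX_RESERVE_MB:
        # Assumption: treat out-of-range values as configuration errors.
        raise ValueError(f"RootMemoryReserve {raw_value:#x} outside 0..{MAX_RESERVE_MB:#x}")
    return raw_value  # 0 disables the parent partition reserve


def read_root_reserve_mb():
    """Read the value on a Windows host (winreg is Windows-only)."""
    import winreg
    try:
        with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, REG_PATH) as key:
            raw, _value_type = winreg.QueryValueEx(key, "RootMemoryReserve")
    except FileNotFoundError:
        raw = None  # key or value not present
    return effective_reserve_mb(raw)
```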

Virtualization Service Clients (VSCs)

Virtualization Service Clients (VSCs) are synthetic device instances that reside in a child partition and utilize hardware resources that are provided by Virtualization Service Providers (VSPs) in the parent partition.

- They communicate with the corresponding VSPs in the parent partition over the VMBus to satisfy a child partition’s device I/O requests.

- A VSC is instantiated when a child partition subscribes to a VSP in the parent partition.

- VSCs are available for installation, or automatically installed, when Integration Services are installed in a child partition, allowing the child partition to utilize synthetic devices.

- Without Integration Services installed, the child partition can only utilize emulated devices.

The following Virtualization Service Clients are available in child partitions after installing Integration Services:

1. Networking – implemented in netvsc60.sys or netvsc50.sys, depending upon the operating system in the child partition.

2. Storage – implemented in storvsc.sys.

3. Video – implemented in vmbusvideom.sys.

4. Human Interface Devices (HID) – implemented in vmbushid.sys.

Integration Services

Integration Services also provide the components that allow child partitions to communicate with other partitions and the hypervisor.

Location of Integration Services:

%systemroot%\System32\VMGuest.iso

When the “Insert Integration Services Setup Disk” Action menu item is selected in the Virtual Machine Connection client application (vmconnect.exe), the worker process for the virtual machine attaches vmguest.iso to the virtual machine’s CDROM drive, utilizing isoparser.sys and storvsp.sys.

Integration Services consist of the following components:

- winhv.sys

- netvsc50.sys

- vmbushid.sys

- IcCoinstall.dll

- vmbus.sys

- netvsc60.sys

- vmicsvc.exe

- vmbuspipe.dll

- storvsc.sys

- s3cap.sys

- vmictimeprovider.dll

- VmdCoinstall.dll

- storflt.sys

- vmbusvideom.sys

- vmbusvideod.dll

The following Integration Components are also installed with Integration Services:

- Heartbeat: Hyper-V Heartbeat Service

- Shutdown: Hyper-V Guest Shutdown Service

- Key/Value Pair Exchange: Hyper-V Data Exchange Service

- Volume Shadow Copy Service (VSS): Hyper-V Volume Shadow Copy Requestor

- Time Synchronization: Hyper-V Time Synchronization Service

These components are installed as system services, and implemented in vmicsvc.exe, in compatible operating systems in child partitions.

Key/Value Pair Exchange

The Key/Value Pair Exchange integration component takes a key/value pair specified in the parent partition and stores it in the operating system of a child partition, or obtains a key/value pair from the child partition and exposes it to the parent partition.

There are four standard key/value pairs stored in the operating system of the child partition under the following registry key:

HKLM\Software\Microsoft\Virtual Machine\Guest\Parameters

Standard Key Value Pairs

- HostName: The name of the Domain Name System (DNS) domain assigned to the parent operating system.

- PhysicalHostName: The non-fully qualified name of the parent partition.

- PhysicalHostNameFullyQualified: The fully qualified name of the parent partition.

- VirtualMachineName: The name of the virtual machine used by the virtualization stack.
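Inside a Windows guest, the standard pairs can be read directly from the registry key given above. A minimal sketch: the key path and value names come from the text, while the helper names are hypothetical. `winreg` is available only in Python on Windows, so it is imported inside the read function, and the portable helper simply reports which standard pairs were not found.

```python
GUEST_PARAMETERS_KEY = r"Software\Microsoft\Virtual Machine\Guest\Parameters"
STANDARD_KVP_NAMES = (
    "HostName",
    "PhysicalHostName",
    "PhysicalHostNameFullyQualified",
    "VirtualMachineName",
)


def read_guest_parameters() -> dict:
    """Read the standard pairs inside a Windows guest (winreg is Windows-only)."""
    import winreg
    values = {}
    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, GUEST_PARAMETERS_KEY) as key:
        for name in STANDARD_KVP_NAMES:
            try:
                values[name], _value_type = winreg.QueryValueEx(key, name)
            except FileNotFoundError:
                pass  # this value is not present under the key
    return values


def missing_standard_pairs(values: dict) -> list:
    """List the standard pair names that were not found."""
    return [name for name in STANDARD_KVP_NAMES if name not in values]
```

A guest-side script can use `PhysicalHostNameFullyQualified` this way to discover which host it is running on without any network call.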

VMBus Architecture

The VMBus is a channel-based communication mechanism used for inter-partition communication and device enumeration on systems with multiple active virtualized partitions.

The VMBus creates and manages ring buffers between partitions. A ring buffer is a fixed-size buffer used as a circular queue: the writer appends data at one end, the reader removes it from the other in first-in, first-out (FIFO) order, and space freed by the reader is reused once the write position wraps around. These ring buffers are used to transfer data or commands between partitions using two endpoints, a server and a client.
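The FIFO wraparound behavior can be illustrated with a small single-producer, single-consumer byte ring. This is a generic sketch, not the VMBus implementation: it omits the shared memory pages, index headers, and cross-partition signaling. Refusing a write that does not fit, instead of overwriting unread data, is a design choice made here so that no message is silently lost.

```python
class RingBuffer:
    """Fixed-size single-producer/single-consumer byte ring (illustrative only)."""

    def __init__(self, capacity: int):
        self._buf = bytearray(capacity)
        self._capacity = capacity
        self._read = 0  # index of the next byte to read
        self._size = 0  # number of unread bytes

    def free_space(self) -> int:
        return self._capacity - self._size

    def write(self, data: bytes) -> bool:
        """Append data at the tail; return False if it does not fit."""
        if len(data) > self.free_space():
            return False  # the writer must wait; unread data is never overwritten
        write_pos = (self._read + self._size) % self._capacity
        for i, byte in enumerate(data):
            self._buf[(write_pos + i) % self._capacity] = byte  # wraps at the end
        self._size += len(data)
        return True

    def read(self, n: int) -> bytes:
        """Remove and return up to n bytes from the head, in FIFO order."""
        n = min(n, self._size)
        out = bytes(self._buf[(self._read + i) % self._capacity] for i in range(n))
        self._read = (self._read + n) % self._capacity
        self._size -= n
        return out
```

For example, with `rb = RingBuffer(8)`, writing `b"abcde"`, reading 3 bytes, and then writing `b"fghij"` stores the second message across the end of the buffer, and the next read still returns `b"defghij"` in order.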

Guest operating systems that do not support Integration Services still communicate with the parent partition through the hypervisor. However, because they are not hypervisor-aware, the hypervisor must intercept their calls to the physical hardware and route them to the parent partition. These calls require more processing overhead than communication over the VMBus.

The VMBus is comprised of the following components:

- VMBus.sys: driver that implements the main functionality of the VMBus.

- VmBusPipe.dll: user-mode component loaded in the VM Worker Process that opens a VMBus channel for sending and receiving data through the VMBus.

The VMBus does not provide a mechanism for partitions to directly communicate with the hypervisor but it does rely on the hypervisor to establish the communication channels between partitions. Communication between partitions and the hypervisor is performed using hypercalls through WinHv.sys as discussed previously.

When viewing the properties of VMBus in Device Manager, you will see that WinHv.sys is also listed as an included component. Although WinHv.sys is loaded along with the VMBus driver and DLL, it is not a part of VMBus.